It’s much easier to create a performant website from scratch than to improve performance on an existing one. But there’s plenty you can do with an older application that’s struggling. Luckily, we like a challenge at CookiesHQ. Here’s how we take our clients’ projects from slow to… woah!

The vast majority of Ruby on Rails projects are still monolithic applications, where the front-end and back-end code share the same codebase. As your project grows, so does your codebase’s complexity, along with the amount of data you store and present to your users.

Our clients’ projects are no exception, and decisions that were valid a couple of years back sometimes need a rethink when performance problems arise.

There are many Ruby performance optimisation guides on the web, and if you’re serious about improving performance and good practice, I recommend you read The Complete Guide to Rails Performance. It’s worth every penny, and so are the downloadable interviews that come with it.

Here’s our process for investigating performance issues at CookiesHQ, plus three examples of optimisations we’ve done for clients that had a significant impact on page load time.


How to identify performance problems

When developing a web application, it’s easy to get used to the speed of your development machine, the low latency of a local connection and near-instant access to your disk.

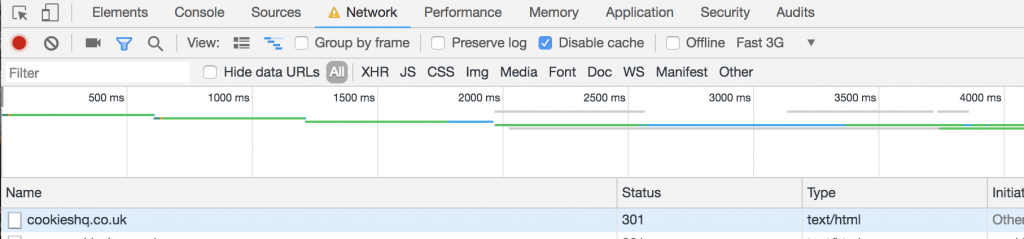

This is why I like to force ‘Disable cache’ and throttle the connection and CPU whenever the Chrome inspector is open on my development machine. It’s not a bulletproof way of testing performance, but it certainly helps us catch slower-than-usual pages, and it lets me experience the app in a less-than-optimal setup while debugging something or just testing a page.

If things seem too slow, we log an investigation card to find out what’s going on.

Since these settings only take effect while the Chrome inspector is open, they’re easy to get rid of if they get in the way. Plus, the surprise of stumbling on a slower-than-usual page while debugging something else ensures it gets logged in our bug tracker for investigation.

Talking about bulletproof solutions, if you’re doing any kind of Ruby on Rails optimisation, a must-have gem is Bullet. For us, it’s a library staple that we install on any application that we have to work on. New or legacy.

Bullet tracks both unnecessary eager loading, which uses more memory and database time than required, and missing eager loading (the classic N+1 query problem), which produces unnecessary calls to the database.
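To make the distinction concrete, here is a toy sketch in plain Ruby. It doesn’t use Bullet or ActiveRecord: `ToyDB` is a hypothetical in-memory stand-in that counts queries, so you can see the access pattern Bullet flags (one extra query per post to fetch its author) next to the eager-loaded version (one batched query for all the authors).

```ruby
# Hypothetical stand-in for a database: every call counts as one query.
# This is NOT Bullet or ActiveRecord; it only mimics the access pattern.
class ToyDB
  attr_reader :query_count

  def initialize(posts, authors)
    @posts = posts     # array of hashes: { id:, author_id: }
    @authors = authors # hash: author_id => name
    @query_count = 0
  end

  def all_posts
    @query_count += 1
    @posts
  end

  # One query per author lookup.
  def author(id)
    @query_count += 1
    @authors.fetch(id)
  end

  # One query for a whole batch of ids, like `WHERE id IN (...)`.
  def authors_for(ids)
    @query_count += 1
    @authors.slice(*ids.uniq)
  end
end

# N+1: one query for the posts, then one more query for each post.
def author_names_n_plus_one(db)
  db.all_posts.map { |post| db.author(post[:author_id]) }
end

# Eager loading: one query for the posts, one batched query for the authors.
def author_names_eager(db)
  posts = db.all_posts
  authors = db.authors_for(posts.map { |post| post[:author_id] })
  posts.map { |post| authors.fetch(post[:author_id]) }
end
```

With ten posts, the first version issues eleven queries and the second issues two, for the same result. In a Rails app, the eager-loaded version is roughly what `Post.includes(:author)` gives you; Bullet’s job is to notice when you forgot it, or added it where it isn’t used.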

Now, these tools and tricks are just the tip of the iceberg when it comes to performance. You will only start to get real performance data when your application is live, accessible by users and under stress.

But for that, you’ll need a monitoring agent to help you determine your baseline and identify slow-loading pages. For startup projects, we’re big fans of Heroku as our hosting infrastructure.

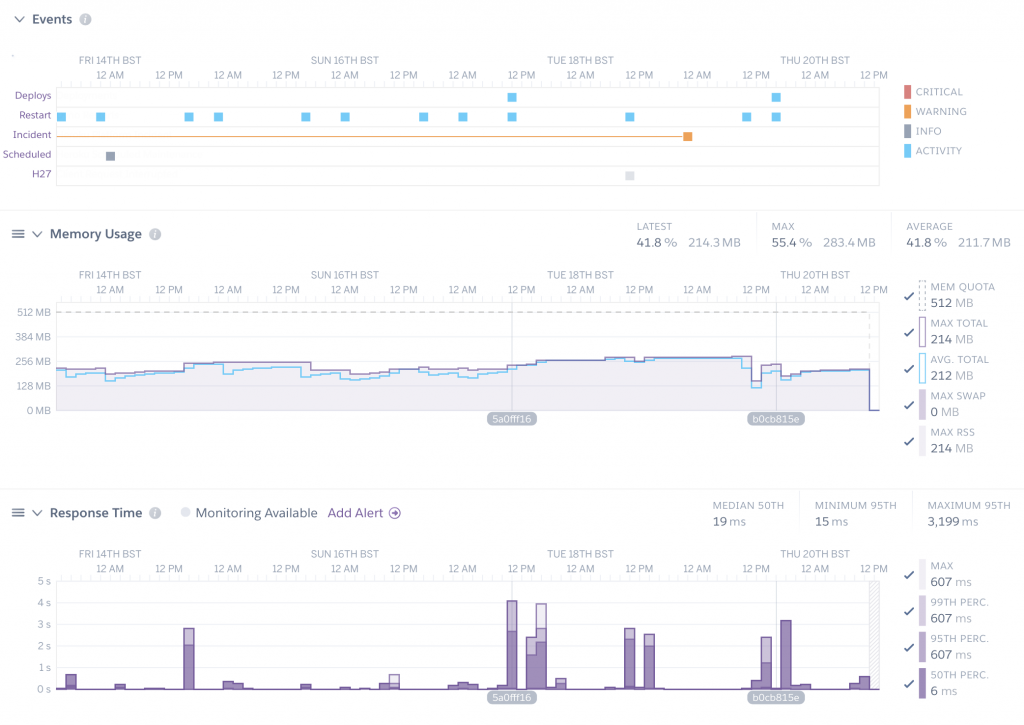

Heroku comes with some basic performance graphs and, while it won’t tell you in great detail which page, controller or action is potentially having performance issues, it can at least alert you when you go over a certain median app load time.
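Whatever tool you use, the underlying idea is a per-page baseline plus a threshold. As a rough sketch, assuming you can export request timings as hypothetical `[path, milliseconds]` pairs, this groups them by path and flags the pages whose median load time exceeds an assumed threshold, slowest first:

```ruby
# Median of a non-empty list of numbers.
def median(values)
  sorted = values.sort
  mid = sorted.length / 2
  sorted.length.odd? ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2.0
end

# requests: array of [path, response_time_ms] pairs (hypothetical export format).
# Returns [path, median_ms] pairs above threshold_ms, slowest first.
def slow_paths(requests, threshold_ms)
  requests
    .group_by { |path, _ms| path }
    .transform_values { |pairs| median(pairs.map { |_path, ms| ms }) }
    .select { |_path, med| med > threshold_ms }
    .sort_by { |_path, med| -med }
end
```

A median hides occasional outliers, so you may also want a higher percentile per page; the same grouping works with a percentile function in place of `median`.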

Among the monitoring services out there, if you’ve ever had to deal with New Relic, you’ll probably share the love-hate relationship with the product that every non-devops person has.

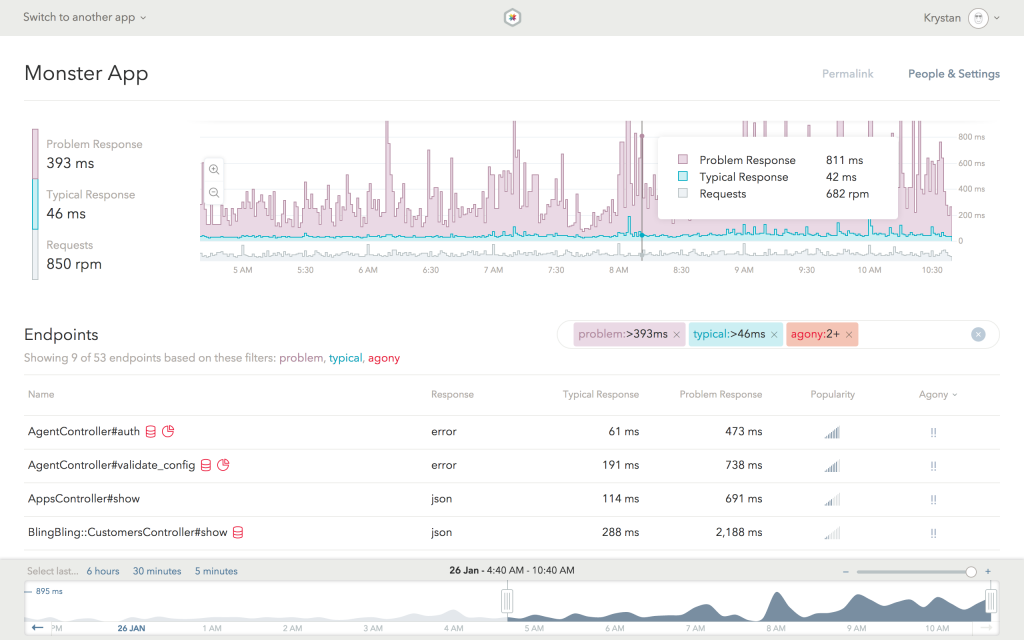

Thankfully, the team at Tilde Inc have created Skylight.io, a drop-in library and web application that gives you all the details you need to track slow-performing pages and actions.

These apps are our bare minimum staples when creating a new application or taking responsibility for an older one. Armed with these tools, we assess the needs of each project and make recommendations to our clients regarding pages that need improvement.

Regular performance assessments are an absolute necessity for any project. A page could well be fast one day and slow eight months later because of the data it has to render.

Performance issue case studies

We’ve helped many clients turn a slow-performing Ruby on Rails app into a slick and fast one. Sometimes, the way we get there might be slightly unorthodox, but the results are the same.

Case study 1 – Patternbank’s design previews

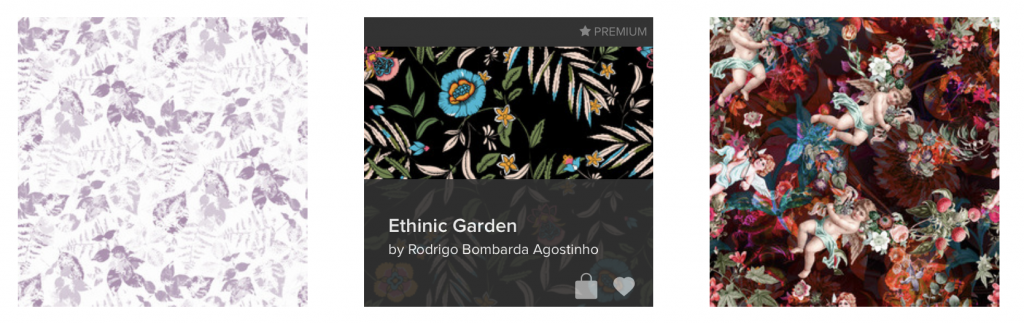

Patternbank is an online marketplace for fashion pattern designers, loaded with useful tools for both designers and buyers.

People tend to buy multiple designs in one go. They might have multiple tabs open when browsing the site and use basket and lightbox functionality as a ‘reminder’ or ‘moodboard’ for designs they like before they buy.

Across the site, designs are all presented the same way: a square snapshot of the design that shows its name when you hover over it. It also displays a visual alert if the design is already in one of the user’s lightboxes or in their basket.

The first implementation of this feature worked well a few years ago, when the website hosted fewer than five thousand designs. But once the website was hosting more than 50 thousand designs for a growing number of concurrent users, we saw the rendering speed of those designs fall.

The problem was that – in order to know at load time if your product was in your basket, one of your lightboxes or both – we had to write some complex querying, which added a lot to the load time.

The load time for a filter page was peaking at 2 seconds or more for some users. Locally, with some heavily loaded (unrealistic) tests (a basket with more than 700 items for a user with hundreds of lightboxes), we got to an average load time of around 7 seconds. Not ideal.

We were also aware that the current implementation was limiting our caching strategy, since we couldn’t cache design fragments while they contained user-specific data.

The solution we came up with was to split the rendering into two operations. First, we would render and cache design fragments without any user-specific data, removing the crazy queries that tried to determine which icon to highlight for each product (basket or lightbox).

We went back to straight queries like ‘Give me all products within a category’. This allowed us to now cache all the design fragments across the application, helping the page load everywhere else.
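Here is a minimal sketch of that first step, assuming a plain in-memory hash in place of the Rails cache store. `FragmentCache`, `Design` and `design_fragment` are hypothetical names; the point is that the cache key depends only on the design (its id and `updated_at`), never on the current user, so every visitor shares the same rendered fragment.

```ruby
require "cgi"

# Hypothetical in-memory fragment cache standing in for the Rails cache store.
class FragmentCache
  attr_reader :renders

  def initialize
    @store = {}
    @renders = 0
  end

  # Return the cached value for key, or run the block once and cache its result.
  def fetch(key)
    @store.fetch(key) do
      @renders += 1
      @store[key] = yield
    end
  end
end

Design = Struct.new(:id, :name, :updated_at)

# User-agnostic fragment: no basket or lightbox state in the key or the HTML,
# so the same cached markup can be served to every user.
def design_fragment(cache, design)
  key = "designs/#{design.id}-#{design.updated_at.to_i}"
  cache.fetch(key) do
    %(<div class="design" data-design-id="#{design.id}">#{CGI.escapeHTML(design.name)}</div>)
  end
end
```

Because the key includes `updated_at`, editing a design produces a new key and the stale fragment is simply never read again, which is the idea behind key-based cache expiration. The `data-design-id` attribute gives a later client-side step something to match user state against.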

But now we were missing the basket or lightbox highlight, which told you if the design had already been favourited and/or added to the basket.

This was our second step. After rendering a result set, we used JavaScript to highlight the relevant product icons by comparing the set of product IDs in the results with the user’s cached list of product IDs.
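The comparison itself is a simple set lookup. The production version runs in the browser in JavaScript; this sketch shows the same logic in Ruby to keep one language throughout. `highlights` is a hypothetical helper that takes the design IDs rendered on the page plus the user’s basket and lightbox IDs, and returns which icons to switch on for each design.

```ruby
require "set"

# page_ids: design ids rendered on the page (read from the cached fragments).
# basket_ids / lightbox_ids: the user's product ids, fetched separately.
# Returns { design_id => [:basket, :lightbox, ...] } for designs needing a highlight.
def highlights(page_ids, basket_ids, lightbox_ids)
  basket = basket_ids.to_set
  lightbox = lightbox_ids.to_set
  page_ids.each_with_object({}) do |id, result|
    flags = []
    flags << :basket if basket.include?(id)
    flags << :lightbox if lightbox.include?(id)
    result[id] = flags unless flags.empty?
  end
end
```

Each membership check is a constant-time `Set` lookup, so the cost grows with the number of designs on the page and the size of the user’s lists, instead of needing per-design queries while rendering.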

Making this change took our heavily loaded local example down from seven seconds for a page load to a couple of milliseconds.

Sometimes there is no (easy) way to improve your queries, especially when user state is involved. Try experimenting with empty states, cache your most common responses and load non-critical user state separately, as we did with JavaScript above. You’ll be surprised how much it can help your overall page load.

Case study 2 – Good Sixty’s complex time slots

Good Sixty provides a platform and delivery services to local independent retailers in the UK.

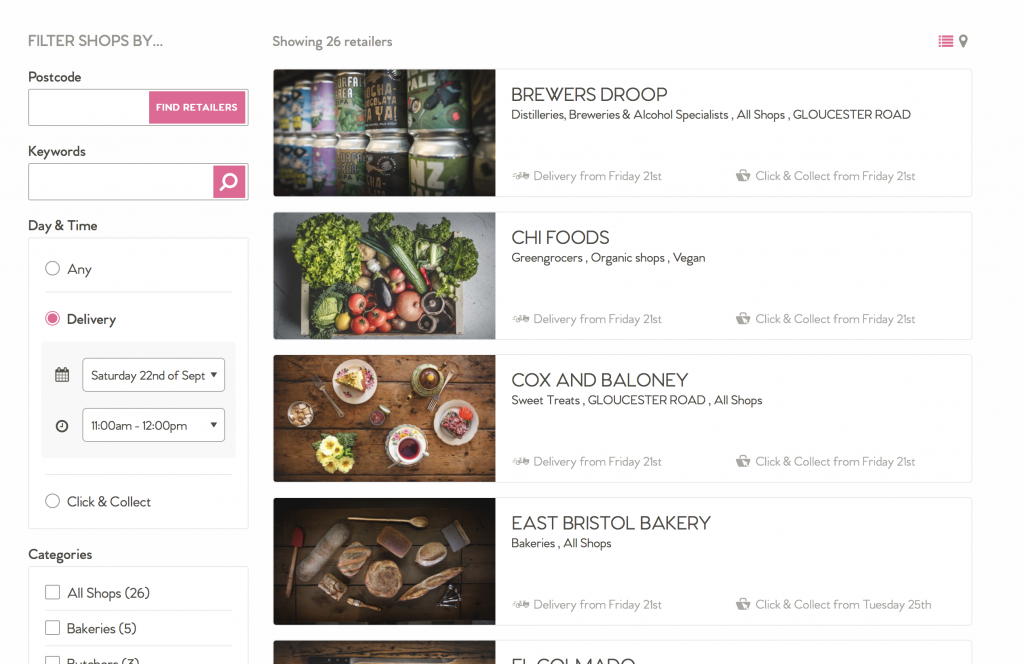

Retailers can list their products on their dedicated page, say when they can deliver or provide a collection service, estimate how long it will take them to prep an order and manage their store holidays. On the customer side, the user can search for retailers in a single interface, refining through multiple search criteria.

One of those criteria was causing performance issues – the ‘when’ of getting your goods delivered or collected (Day & Time).

In order for us to determine if a retailer can deliver to you on Saturday 22nd of September between 11am and 12pm, we need to know a few things:

- How long does this retailer take to prepare an order? The preparation time could be anything between 1 hour and 48 hours.

- Does the retailer offer delivery or collection slots on that day and at that time?

- Is the retailer on holiday at that time?
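Expressed as code, those three questions become a single predicate. This is a sketch under assumptions: the `Shop` struct and its fields (`prep_hours`, weekday-keyed `delivery_slots`, `holidays` as date ranges) are hypothetical, slots are assumed to be one hour long, and times are in UTC.

```ruby
require "date"

# Hypothetical shop model for illustration; field names are assumptions.
#   prep_hours:     hours needed to prepare an order (1..48)
#   delivery_slots: weekday (0 = Sunday) => array of slot start hours
#   holidays:       array of Date ranges when the shop is closed
Shop = Struct.new(:name, :prep_hours, :delivery_slots, :holidays, keyword_init: true)

# Can `shop` deliver in the one-hour slot starting at `slot_start`
# if the order is placed at `now`?
def can_deliver?(shop, slot_start, now)
  # 1. Is there enough time to prepare the order?
  return false if now + shop.prep_hours * 3600 > slot_start

  # 2. Does the shop offer a slot starting at that hour on that weekday?
  hours = shop.delivery_slots.fetch(slot_start.wday, [])
  return false unless hours.include?(slot_start.hour)

  # 3. Is the shop on holiday that day?
  day = slot_start.to_date
  shop.holidays.none? { |range| range.cover?(day) }
end
```

Running a check like this for every shop on every search is exactly the cost that becomes painful once it is bundled into the search query itself.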

Our early tests suggested that trying to bundle all this logic inside the search itself was going to introduce a massive performance hit. So we opted for a simple daily date cache.

On the model representing a shop, we added next_20_days_delivery_times and next_20_days_collection_times columns, using PostgreSQL text arrays. These arrays hold lists of Unix epoch timestamps, which are easy to query and manipulate.

These lists are refreshed daily by a cron job, and they take into account all the logic that decides whether a shop can or can’t fulfil a delivery at a certain time.
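A sketch of what the daily job might compute, assuming hourly slots in UTC. `upcoming_slot_timestamps` is a hypothetical helper: it enumerates every slot start in the next 20 days and keeps the ones the block accepts. The block stands in for the shop’s real rules (preparation time, delivery or collection hours, holidays). The result is a list of epoch timestamps as strings, ready to store in a `text[]` column.

```ruby
SECONDS_PER_HOUR = 3600

# Returns epoch timestamps (as strings, to match a text[] column) for every
# hourly slot start in the next `days` days that the given block accepts.
def upcoming_slot_timestamps(now, days: 20)
  # First candidate slot: the next full hour after `now`, in UTC.
  first = Time.at((now.to_i / SECONDS_PER_HOUR + 1) * SECONDS_PER_HOUR).utc
  (0...(days * 24))
    .map { |i| first + i * SECONDS_PER_HOUR }
    .select { |slot| yield slot }
    .map { |slot| slot.to_i.to_s }
end
```

For example, a shop that only delivers on Saturdays at 11am would be refreshed with `upcoming_slot_timestamps(Time.now) { |slot| slot.saturday? && slot.hour == 11 }`. Doing this once per shop per day moves all the expensive rule evaluation out of the search request.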

Now, on the search side, the only thing we need to search for are shops where the next_20_days_delivery_times array contains ‘1537614053’ – the timestamp representation of our date. Something that databases are really good at doing!
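A minimal sketch of the search side, assuming the stored arrays hold slot start times. The lookup is an exact match, so the search value has to be normalised to the same slot boundary as the stored values (the slot starting at 11am UTC on 22 September 2018 is `1537614000`). `ShopRow`, `slot_timestamp` and `shops_delivering_at` are hypothetical names, and the in-memory `select` stands in for the database query; the comment shows the kind of PostgreSQL condition it corresponds to.

```ruby
# Hypothetical row shape: a shop name plus its precomputed text[] column.
ShopRow = Struct.new(:name, :next_20_days_delivery_times)

# Epoch timestamp (as a string) for the start of an hourly slot, in UTC.
def slot_timestamp(year, month, day, hour)
  Time.utc(year, month, day, hour).to_i.to_s
end

# In-memory equivalent of a PostgreSQL array-containment filter such as:
#   WHERE next_20_days_delivery_times @> ARRAY['1537614000']::text[]
# (a GIN index on the column can serve the @> operator).
def shops_delivering_at(shops, timestamp)
  shops.select { |shop| shop.next_20_days_delivery_times.include?(timestamp) }
end
```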

Here again, we avoided a massive performance bottleneck for a startup without incurring the cost of a full re-architecture. We just store what we need daily, trust that point of reference to be correct and implement the feature for the end user.

Case study 3 – Database indexes aren’t just for Christmas

One app that we inherited in 2013 is a Ruby on Rails app coupled with Mongoid (the MongoDB ODM for Ruby).

Now, this application is a typical enterprise CRM, and using MongoDB’s document model to store CRM-type data certainly wasn’t the best decision. But, since the project was already live and contained a lot of data (two years’ worth of activity prior to our takeover), we couldn’t entertain a move to PostgreSQL and committed to working with what we had.

Another library staple we use when taking ownership of a pre-existing codebase is lol_dba. This gem inspects your application and tries to identify which columns don’t have an index but probably should.
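As a much simpler illustration of the idea (not how lol_dba is implemented), this hypothetical `missing_foreign_key_indexes` helper takes a hand-written schema description and flags `*_id` columns that have no index, since foreign keys are the columns most often used in lookups and joins.

```ruby
# schema: { table_name => { columns: [...], indexed: [...] } } (hypothetical shape).
# Returns "table.column" strings for foreign-key-looking columns without an index.
def missing_foreign_key_indexes(schema)
  schema.flat_map do |table, info|
    info[:columns]
      .select { |col| col.end_with?("_id") }
      .reject { |col| info[:indexed].include?(col) }
      .map { |col| "#{table}.#{col}" }
  end
end
```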


After about a year of supporting the Mongoid project, some of the reports were loading extremely slowly, and some would even time out (the page wouldn’t respond at all).

Given Mongoid’s document structure, we knew that eager loading wasn’t possible (at least not as efficiently as with traditional relational databases). But, by investigating the logs of our running app, we could see the number of queries ballooning every time we tried to access a nested or child record: extra queries for every record, multiplied by every association we touched. Think listing posts and fetching each post’s author as well as its number of comments and its tags.

That’s when we learned that Mongoid offers the ability to index fields.
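In Mongoid, indexes are declared on the model with the `index` macro (for example `index({ post_id: 1 })`) and created with the `db:mongoid:create_indexes` Rake task. The sketch below doesn’t use Mongoid at all: `ToyCollection` is a hypothetical in-memory collection that counts how many documents each lookup examines, to show why an index matters when you fetch child records once per parent. It uses a hash index for simplicity, whereas MongoDB’s indexes are B-trees, and it doesn’t keep the index up to date when documents change.

```ruby
# Hypothetical document collection that counts documents examined per lookup.
class ToyCollection
  attr_reader :examined

  def initialize(docs)
    @docs = docs
    @indexes = {}
    @examined = 0
  end

  # Build a hash index on `field`: value => matching documents.
  def create_index(field)
    @indexes[field] = @docs.group_by { |doc| doc[field] }
  end

  def where(field, value)
    if (index = @indexes[field])
      matches = index.fetch(value, [])
      @examined += matches.length # only the matching documents are touched
      matches
    else
      @examined += @docs.length   # full collection scan
      @docs.select { |doc| doc[field] == value }
    end
  end
end
```

With 1,000 comments spread over 100 posts, fetching every post’s comments scans 100,000 documents without the index and touches only the 1,000 matching ones with it.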

By combining correct indexes and some refactoring around how we would get the data, we managed to mitigate the problems. Now we’re back to our normal page load for the impacted CRM reports.

What we’ve learned so far

Even though it’s fascinating to dive into the code and look for code-related performance optimisations, you sometimes just have to be creative with your solutions. Mixing fast, cached server-side rendering and loading extra computational data with JavaScript, or just caching your hard-to-compute data on a daily basis, can do wonders for your app.

If you have an app that you feel is too slow or could be faster, drop me an email to talk through your options.

You could also put your questions to our panel of experts at Design/Build/Market: Performance Matters, on the 23rd October at The Engine Shed, Bristol.

Join us for an evening of talks from professionals in the fields of design, development and marketing, and explore performance optimisation techniques that can have a big impact on your business.